Ingress Controller

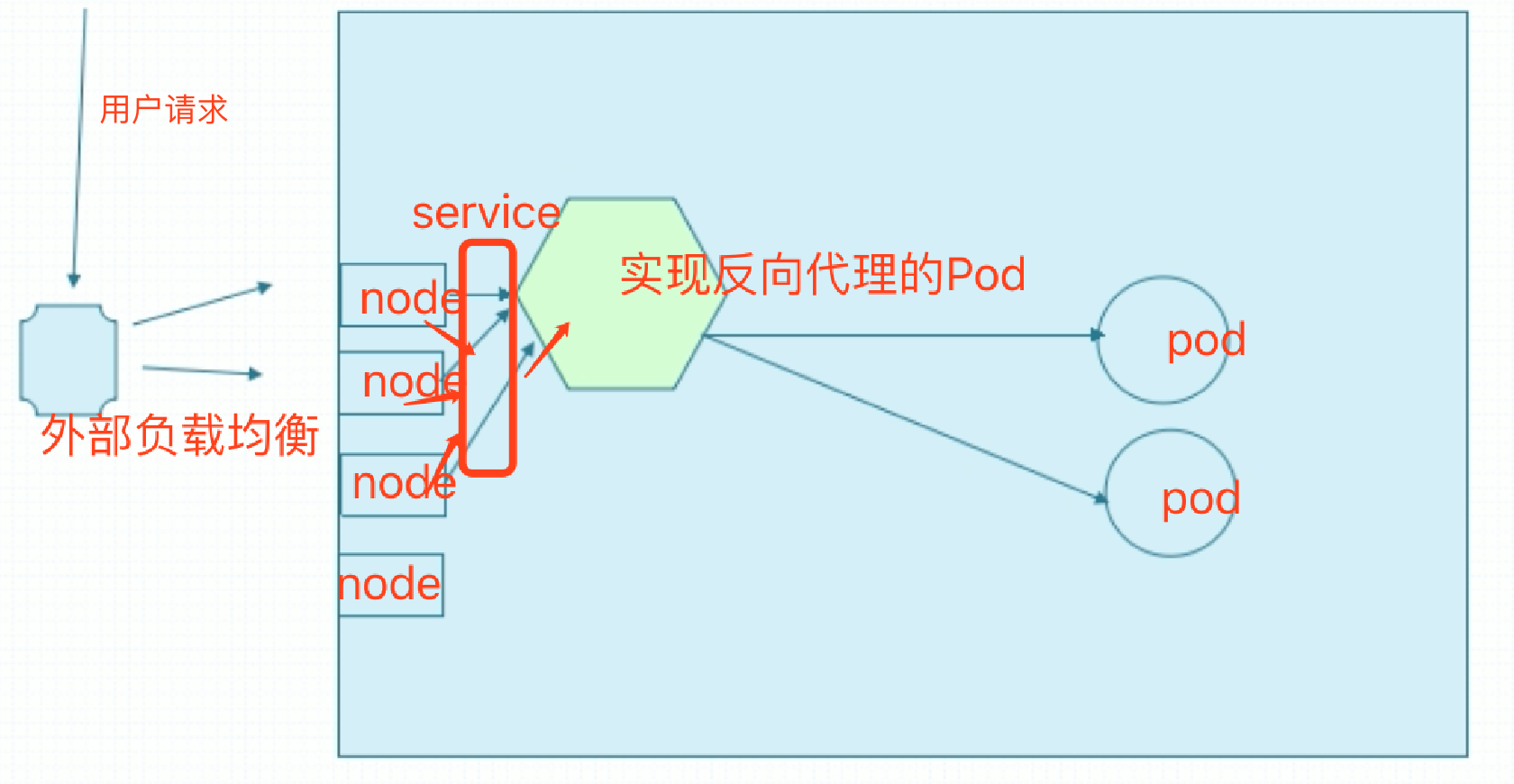

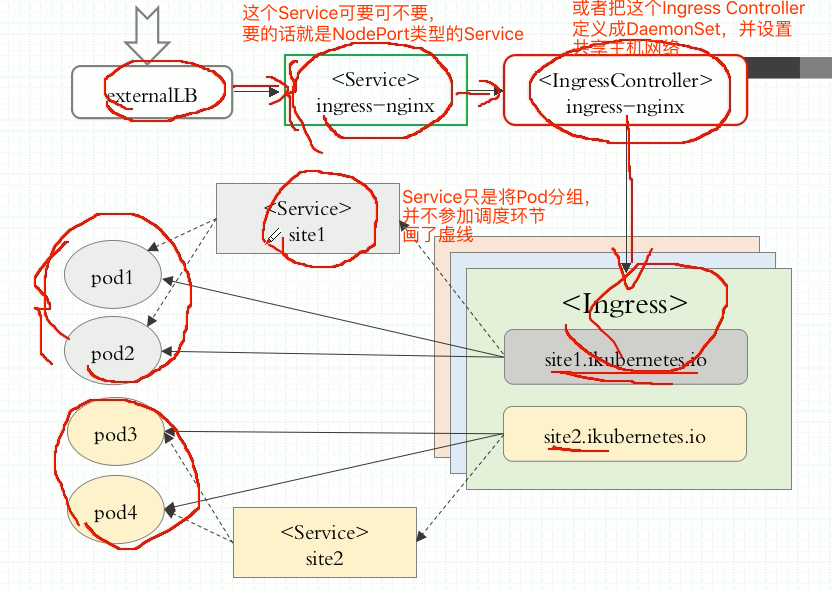

在Kubernetes集群中,后端被代理的资源,是不配置https的,是纯明文的http,但是我们使用一个独特的调度器或者叫Pod,对这个Pod来讲,它是一个运行在用户空间的应用程序,比如Nginx、traefik、haproxy、Envoy等。当用户试图访问某一服务时,我们不让这些请求先到达这些后端Pod的service,而是先到达这个Pod。这个Pod和service后的Pod是同一个网段的,所以可以让这个Pod和后端Pod直接通信,不要经过service,来完成反向代理。问题是这个Pod怎么接入外部流量呢?它需要NodePort类型的service。

客户端通过NodePort类型的service调度以后,与我们专门配置了https的Pod进行通信。但是等请求到达此Pod以后,由此Pod反向代理至后端Pod。所以这个Pod是https会话卸载器了。

访问任何一个节点的NodePort都能到达那个反向代理Pod,每一个节点都拥有Pod所在网络的地址,可以跟Pod直接通信。

但是这种模式调度次数太多了,集群外负载均衡调度到Node,Node调度到service,service调度到反向代理Pod,反向代理Pod调度到后端Pod。性能太差了。

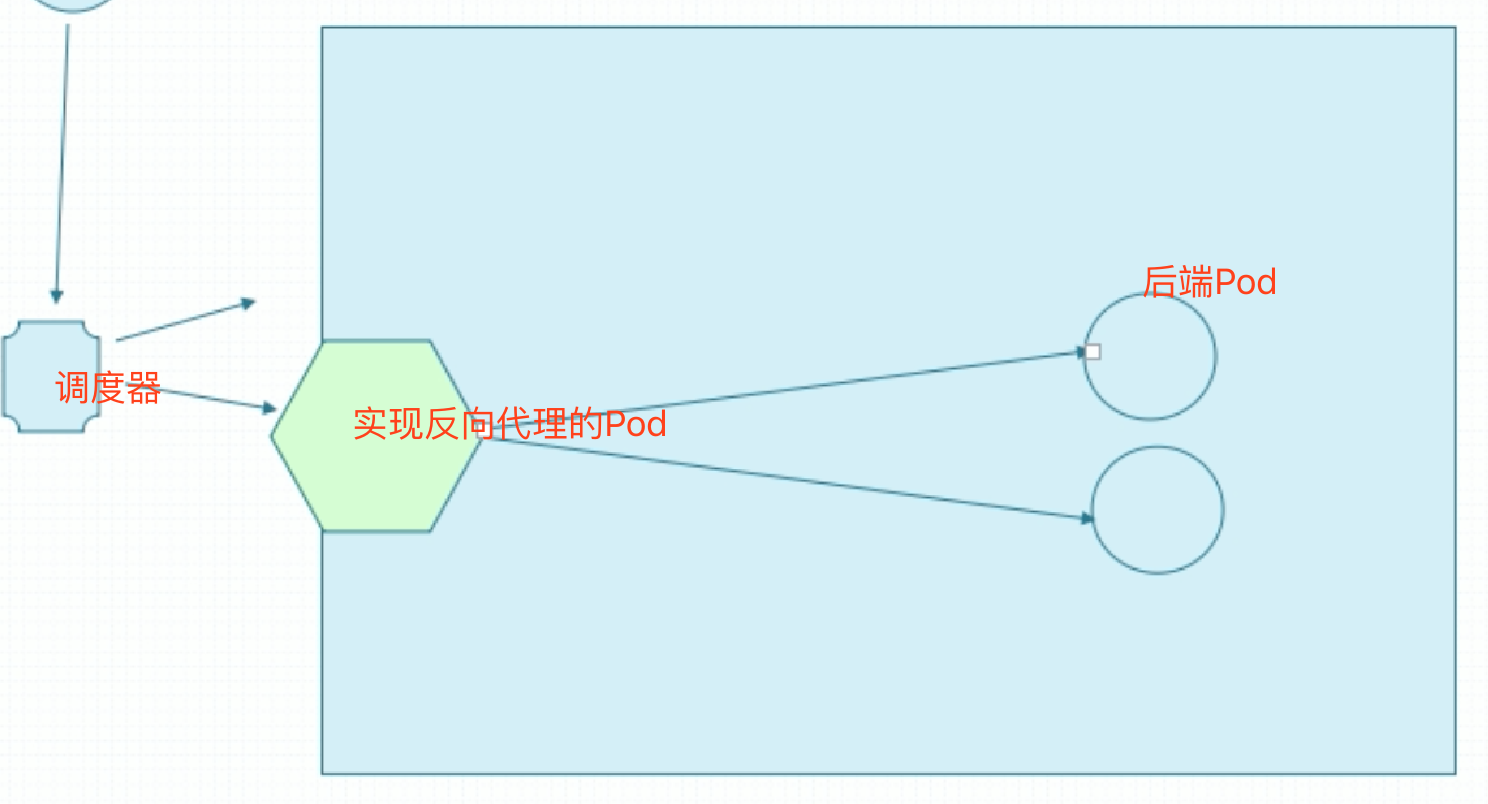

可以让实现反向代理的Pod直接共享节点的网络名称空间,也就是Pod监听着宿主机的地址。这样在集群外做个4层负载均衡,这个四层调度性能损失较少,然后到集群Pod了。

但是这样一来,这个Pod在每个node上只能运行1个了。一般来讲,这样的Pod,集群一般只运行1个,这个Pod运行在集群某个节点上就可以了。但是让这个Pod监听在节点的端口上,会有一个问题。使用Service时,用户访问任何一个节点的NodePort都行,但是目前这个Pod只运行1个,只监听在一个节点上。万一这个节点挂了呢?

使用DaemonSet,在每个节点上都运行,而且只运行1个这样的Pod。这样调度到任何一个节点都可以了。但是假如集群有非常多的节点,比如1000个节点,没必要在每个节点上都运行这样的Pod,DaemonSet可以实现在部分节点上运行。1000个节点拿出3个节点,打上污点,让别的Pod都调度不上来,我们定义这个DaemonSet控制下的Pod只允许运行在这3个节点上,并且能容忍这个污点。这3个节点专门用来接入集群外部的7层调度的流量。

而这个Pod在k8s当中有个专门的称呼,叫Ingress Controller。Ingress Controller是一个或一组Pod资源,它通常就是一个应用程序,这个应用程序就是拥有7层代理能力和调度能力的应用程序。目前k8s上的选择通常有4种:Nginx、Traefik、Envoy、HAProxy。最不受待见的就是HAProxy,但是现在在serviceMesh,服务网格中大家倾向的是Envoy。

将来在用Ingress Controller时,我们有3种选择:Nginx、Traefik、Envoy。去做微服务的,大家都比较倾向于Envoy。Traefik本身也是为微服务设计的,动态生成配置。Nginx是后来改造的。

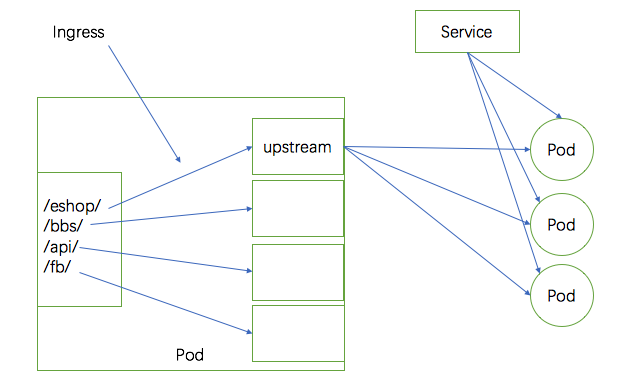

假设这个7层Pod调度多个后端服务,比如有一组Pod提供了电商服务,第二组Pod提供了社交服务,第三组服务提供了API服务,还有一组Pod提供的是论坛服务。这个7层Pod如果来调度这4组http服务。以Nginx为例,这是4组upstream,然后定义4个虚拟主机。假如说这个Pod只有1个IP地址,那么做4个虚拟主机只能根据4个主机名来做了。每个虚拟主机对应后端Pod。这4个虚拟主机要能提供公网能解析的域名。但是假如我们没有那么多主机名,就1个域名怎么办?url映射。每一个location映射不到不同的upstream。

Ingress

现在有个问题,Nginx中upstream中要指定有哪些主机的,现在后端不是主机,Pod是有生命周期的,随时有可能挂掉,起来一个新Pod,新Pod的IP地址就变了。另外后端的Pod可以动态升缩的,改一下,Pod就变多或变少了。

Service是怎么面对这个问题的:Service通过标签选择器关联标签来解决,而且Service始终watch着apiserver中的api,关心自己对应的资源是否发生变动。

这里Nginx怎么办?Nginx是运行在Pod中的,配置是在Pod内部的,upstream对应的Pod变动怎么办?同样的,Ingress Controller,也需要随时watch api当中的后端Pod资源的改变,怎么知道是哪些Pod资源呢?Ingress Controller自己没这个能力,它自己并不知道目前符合自己条件的Pod资源有哪些个,必须要借助于Service来实现。因此我们还是得建Service。每一个upstream服务器组要对应1个Service资源。但是这个Service不是被当做代理时的中间节点,它仅仅是帮忙分组的,知道找哪几个Pod就行了,调度时是不会走Service的。Pod一有变化,Service对应的也变了,这个变化怎么反应到配置文件中呢?依赖于一个专门的资源叫Ingress。Ingress和Ingress Controller是两回事,我们定义一个Ingress的时候要说明,期望Ingress Controller是如何给我们建一个前端,又给我们定义一个后端,就是upstream server,这个upstream server中有几个主机,Ingress是通过这个Service来知道的。

而且Ingress有一个特点,作为一个资源来讲,它可以直接通过边界注入到Ingress Controller里面来,注入并保存为配置文件。而且一旦Ingress发现Service选定的后端Pod发生改变,这个改变一定会及时反应到Ingress中,Ingress会及时注入到Ingress Controller中定义的Pod中,而且还能触发主容器的进程重载配置文件。但是Nginx并不是很适合这种场景,每次修改配置都得重载,traefik、Envoy天生就是为这种设计而生的,只要动了,它自己可以自动加载生效,不需要重载。或者说它可以自己监控着配置文件,改变了就自己重载了。

使用步骤:先是定义Ingress Controller这个Pod,然后去定义Ingress,而后在定义后端Pod生成service。

Ingress资源定义字段

|

|

一级字段依然是这5个。

|

|

官方文档中指明Ingress有很多种类型,要么是基于虚拟主机的,要么是url映射的。

Ingress Controller已经是作为k8s的附件了。整个k8s集群,有master、node和附件组成,一共有4个核心附件,DNS、heasper、dashboard、Ingress Controller。

创建Nginx Ingress Controller

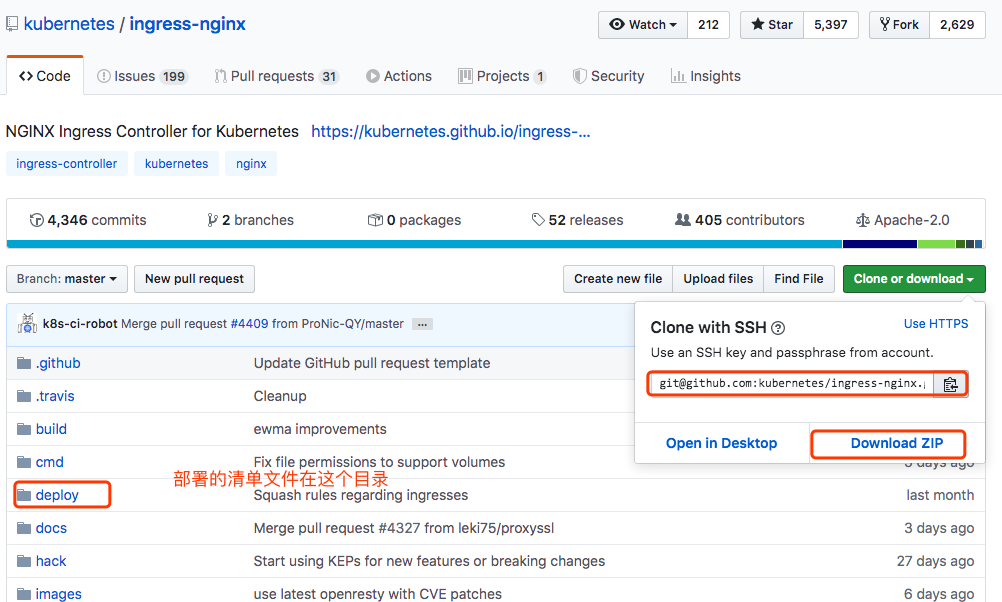

浏览器输入Kubernetes在github上网址,在这个下面可以找到一个项目叫“ingress-nginx”。点击进去:

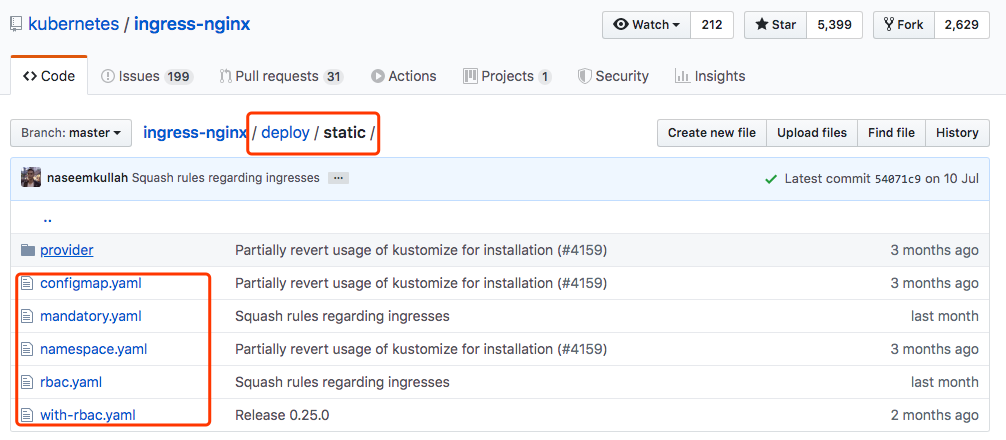

部署时只会用到deploy/static目录下的文件,我们可以把项目克隆下来,或者直接进deploy/static目录下载这些清单文件。

在部署前,可以先大概了解下,部署Nginx Ingress Controller需要部署哪些资源:

- namespace.yaml: Nginx Ingress Controller要部署在一个独立的namespace中,可以打开namespace.yaml中看下。

- configmap.yaml: 用到configMap,从外界为Nginx注入配置,可以查看configmap.yaml中的内容

- rbac.yaml: 我们使用kubeadm部署的k8s集群默认启用了rbac,这个rbac.yaml文件定义可一个ClusterRole,做一些授权,必要的让这个Ingress Controller拥有访问它本来到达不了的名称空间的权限

- with-rbac.yaml: 部署Ingress Controller的清单文件,带上了rbac

- mandatory.yaml: 这个清单文件是把所有资源都组合在了一个文件中。

下载yaml清单文件:

先创建namespace,其他的yaml文件执行顺序没关系:

创建其他资源:

|

|

这个Pod内的镜像在国内拉取速度非常慢,等了好久。

可以提前手动拉取:

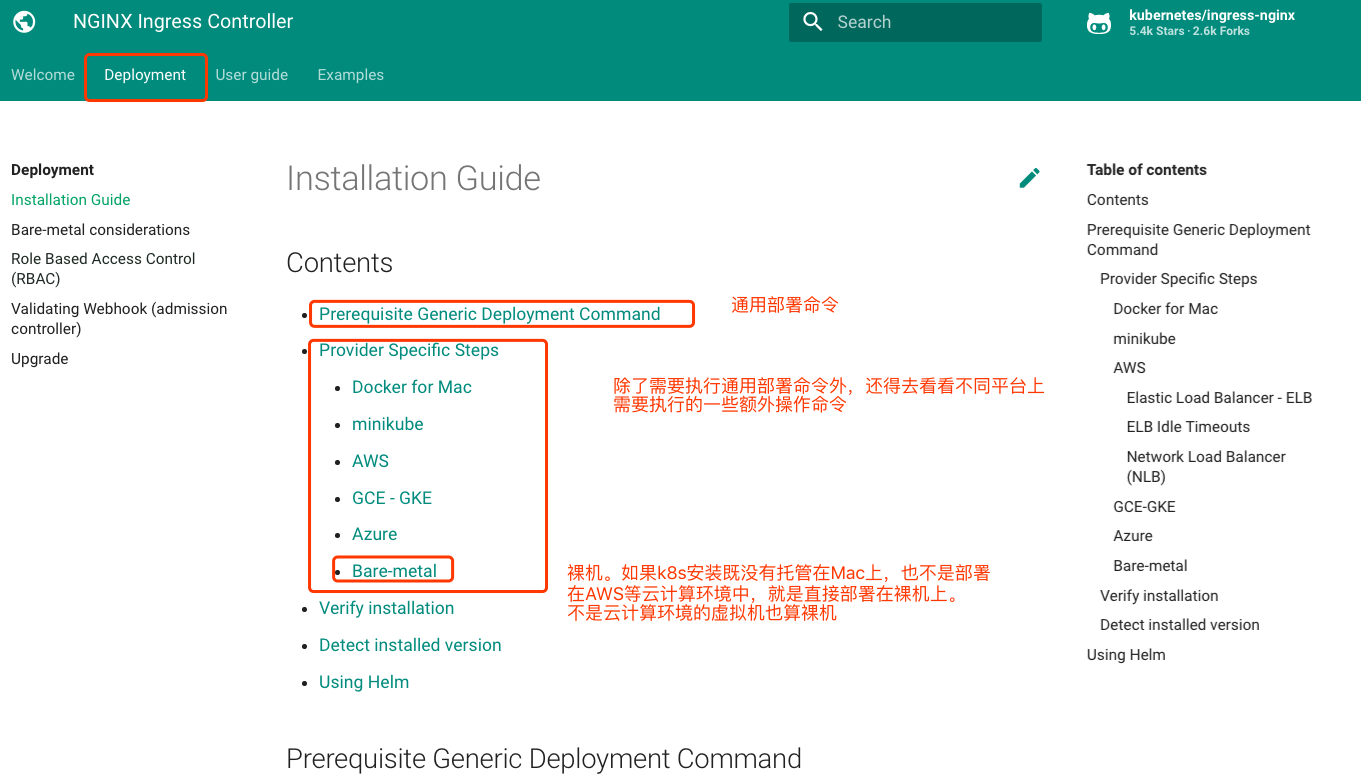

目前 Nginx Ingress Controller 已经有自己项目的官方站点了: https://kubernetes.github.io/ingress-nginx/

对于通用部署命令中,只需要执行一条命令即可:

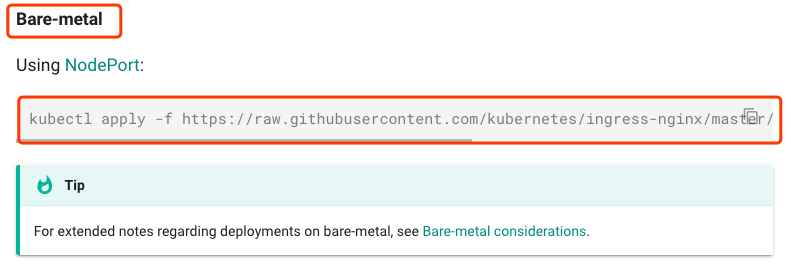

这个mandatory.yaml就是将所有需要的资源定义在了这一个清单文件中了。我这里部署在了虚拟机上,还需要看下裸机章节中需要执行什么额外操作:

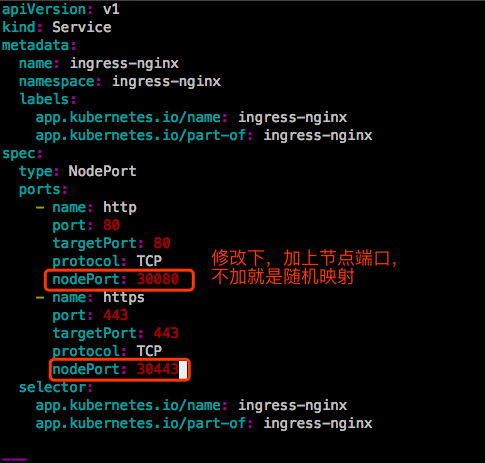

如果不加这个service nodeport,你会发现你的Ingress Controller部署完以后,只能在集群内部访问,因为Ingress Controller无法接入集群外部的流量,为了接入流量,我们得为它做一个NodePort类型的service。

有两种方案让Ingress Controller接入外部流量:

- 一是创建一个NodePort类型的Service,其标签选择器选中Ingress Controller这个Pod

- 二是部署Ingress Controller这个Pod时,设置其共享节点网络名称空间,这个方案需要我们在创建Ingress Controller前修改with-rbac.yaml文件。修改如下几个地方:

- 将一级字段kind的值从Deployment改为DaemonSet

- 将一级字段spec下的replicas字段删除

- 在spec.template.spec下加一个字段 hostNetwork: true

我这里选择第一种方案,那就还需要为Ingress Controller创建一个NodePort类型的service,不然无法在集群外访问。

创建Ingress

看看k8s官网上Ingress的定义示例,打开网址:https://kubernetes.io/docs/concepts/services-networking/ingress/ ,有个最简单的示例如下:

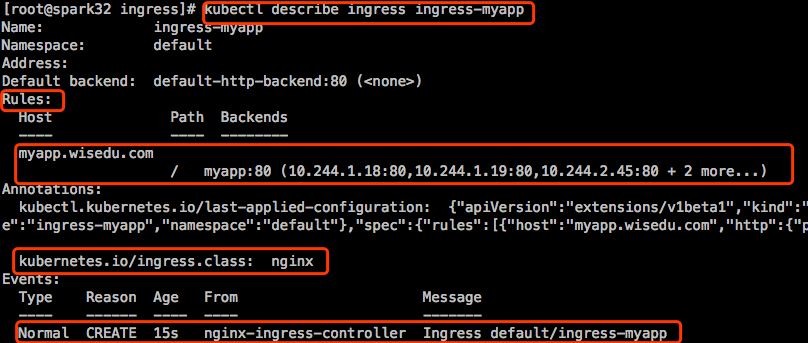

其中,annotations至关重要,必须要定义。在这个annotations中必须指明我们用的Ingress Controller类型是Nginx。

示例1

下面定义Ingress,将myapp-deploy里的Pod通过Nginx Ingress Controller向外提供服务。

已经有一个Deployment了,为这个Deployment创建个Service:

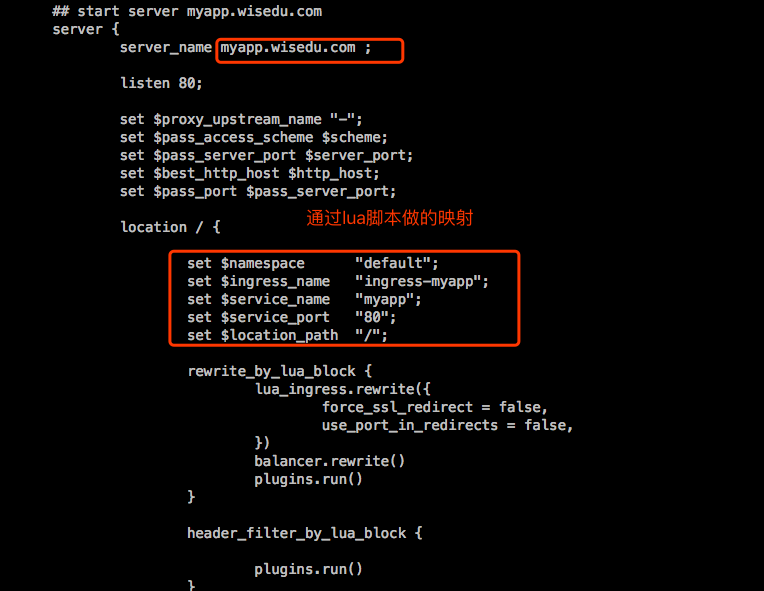

在定义Ingress时需要加一个注解,说明接下来要用到的Ingress Controller是Nginx,而不是Traefik和Envoy。要靠这个Annotation来识别的。他才能转换成对应的与Controller相匹配的规则。

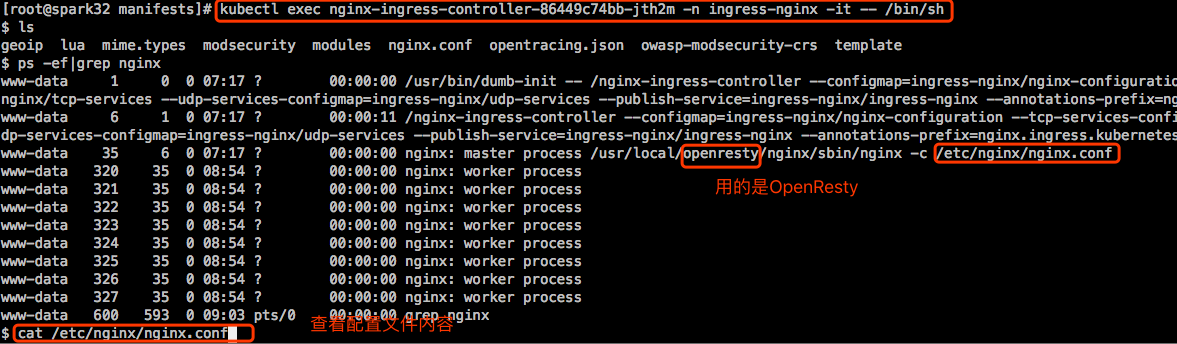

去看看配置有没有加到Nginx上:

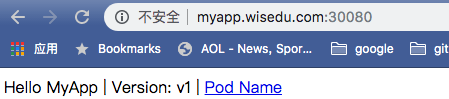

下面修改下客户端hosts文件,我这里修改自己笔记本的/etc/hosts文件,把myapp.wisedu.com这个域名配置解析成k8s集群中所有节点的IP地址,我这里node节点有3个:

打开浏览器访问:

示例2

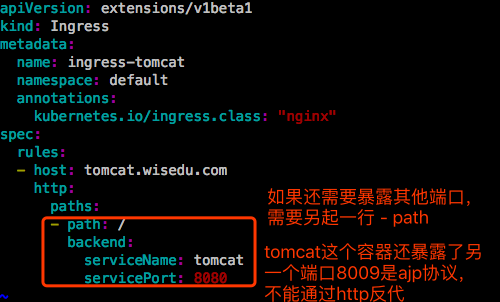

1、创建Deployment和Service(该示例中我使用的是headless Service)

|

|

2、创建Ingress

3、配置hosts文件

在要访问这个服务的客户端机器上配置hosts文件,我这里配置的是:

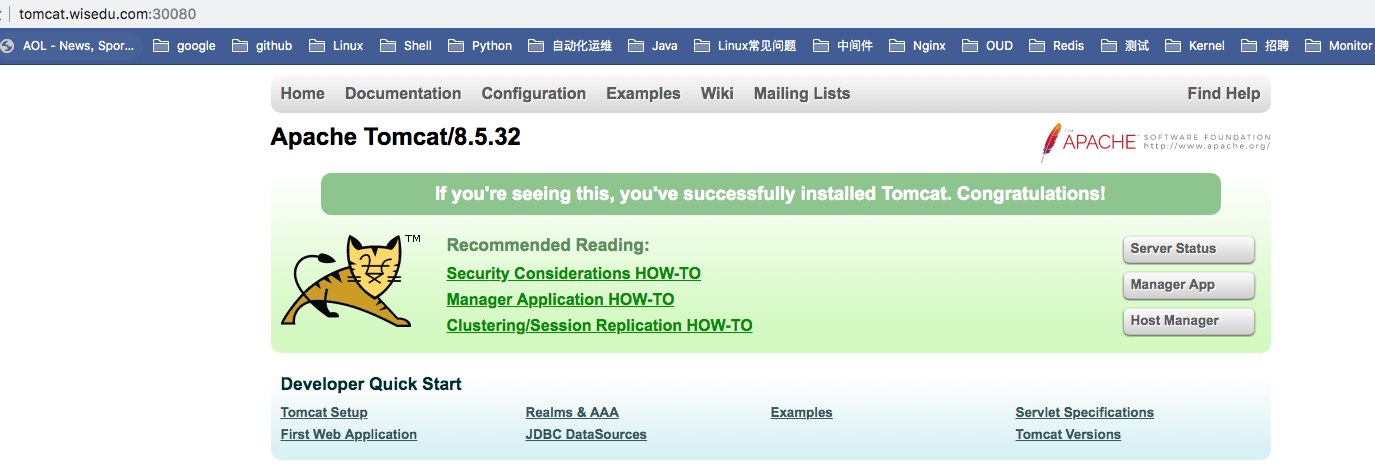

打开浏览器访问:

示例3:HTTPS

需要证书和私钥,而这个证书和私钥我们还需要创建为特定格式才能提供给Ingress。不是说做好证书贴到Nginx的Pod里面去就可以了,需要先转成特殊格式,这个特殊的格式叫secret。它也是个标准的k8s对象。secret也是被注入到Pod中,被Pod所引用。

这里就不做CA了,直接做一个自签发的证书。

1、生成私钥和自签发证书

2、创建secret

3、创建Ingress,包含这个secret

表示把哪个主机做成tls,并且用哪个secret来获取证书和私钥等相关信息。

|

|

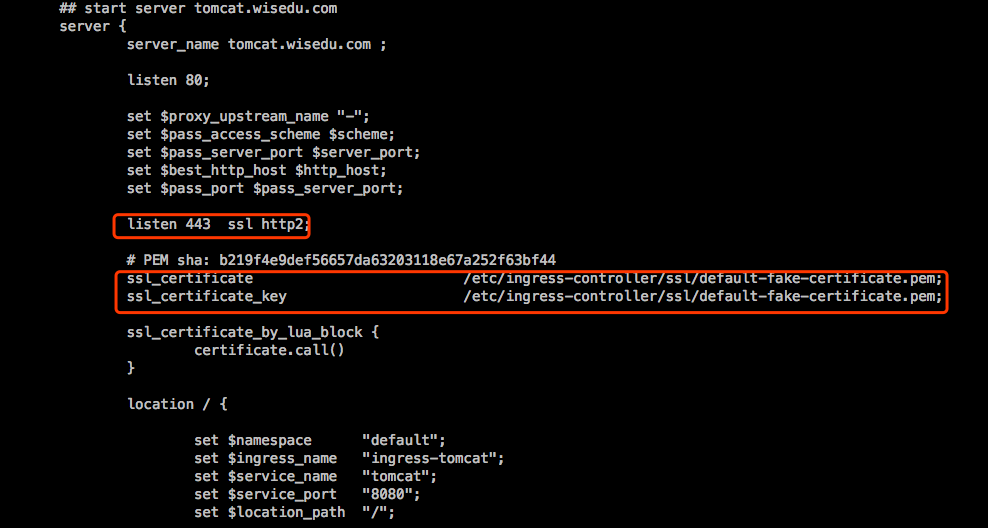

查看Nginx Ingress Controller中的配置:

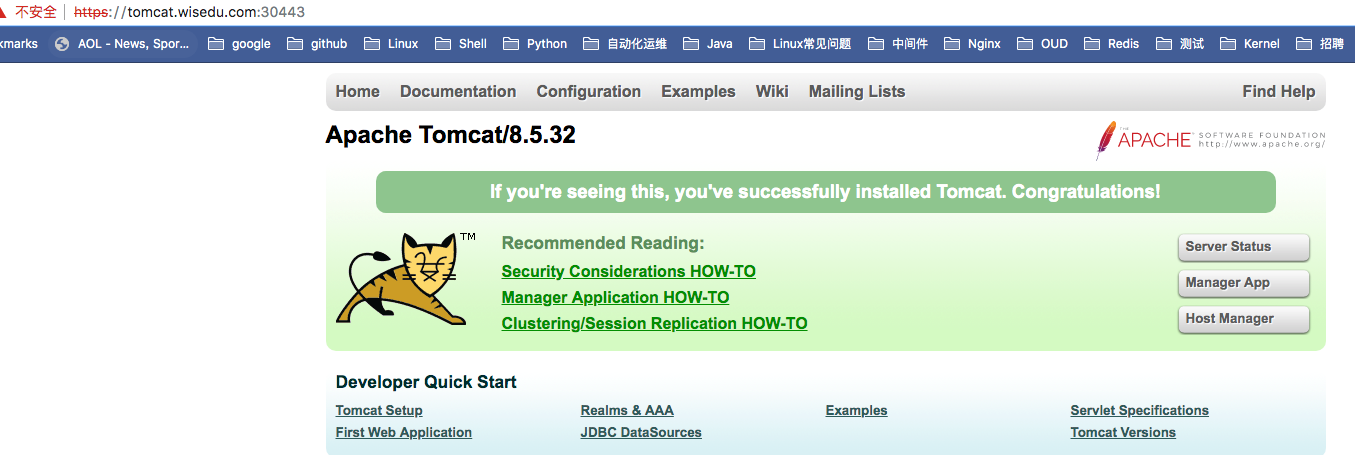

浏览器访问https: