Commonly used authorization plugins

RBAC

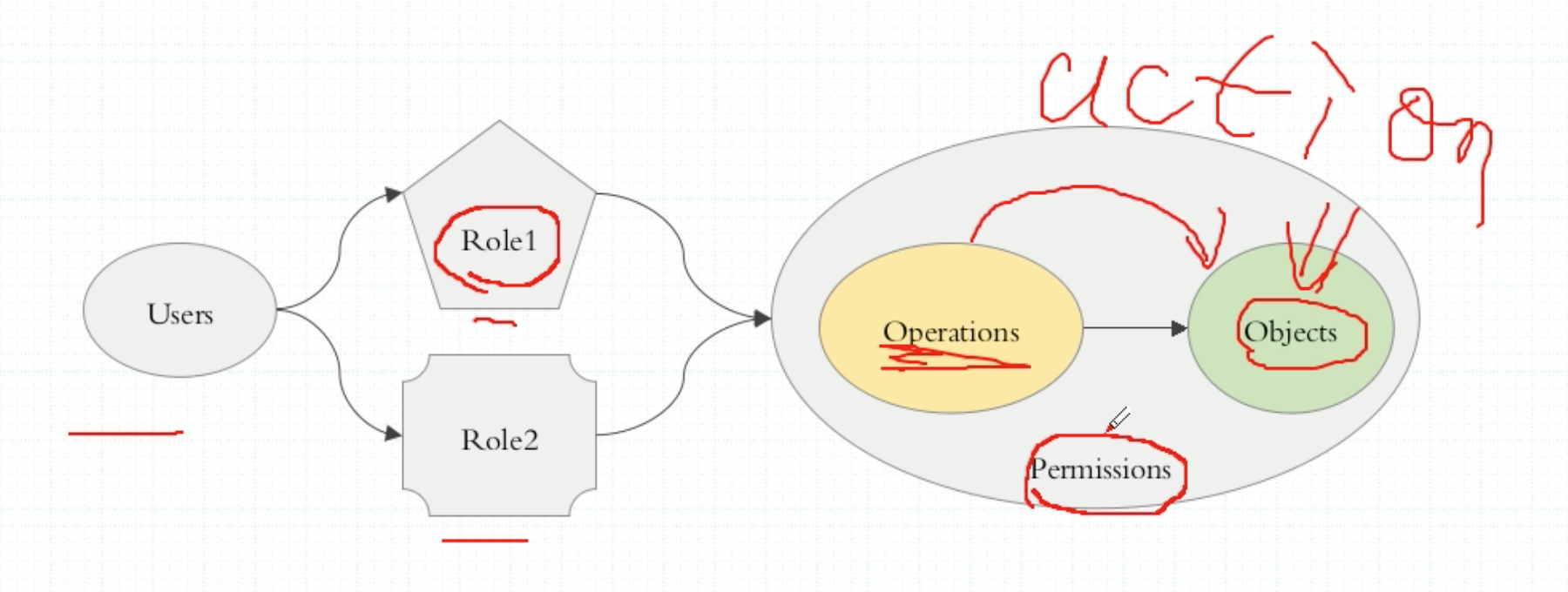

RBAC: Role-Based Access Control

- Role

- Permission

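All permissions are granted to roles. A user then assumes a role and thereby gets that role's permissions. This is why it is called role-based access control.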

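How are permissions defined? In terms of objects. Everything in Kubernetes falls into three categories. The first is objects, which make up the vast majority. The second is object lists. The third is virtual objects, usually URLs; this category is small and is called non-resource URLs. In the RESTful style, every operation is some action applied to an object, called an operation. The set of operations that may be applied to an object forms a Permission, and a Role is then granted one or more of these permissions.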
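Because objects and object lists are addressed separately, the same HTTP method can map to different operations. The sketch below follows the request-verb table in the Kubernetes authorization documentation; the helper name `request_verb` is hypothetical, and subresources and special verbs such as `impersonate` are ignored.

```python
def request_verb(http_method: str, is_collection: bool, watch: bool = False) -> str:
    """Map an HTTP request on a resource to the RBAC verb it is checked against.

    Hypothetical helper; subresources and special verbs are not handled.
    """
    method = http_method.upper()
    if method in ("GET", "HEAD"):
        if watch:
            return "watch"  # ?watch=true on a single object or on a list
        return "list" if is_collection else "get"
    if method == "POST":
        return "create"
    if method == "PUT":
        return "update"
    if method == "PATCH":
        return "patch"
    if method == "DELETE":
        return "deletecollection" if is_collection else "delete"
    raise ValueError(f"unsupported method: {http_method}")


# Reading one Pod and reading the Pod list are different operations
print(request_verb("GET", is_collection=False))    # get
print(request_verb("GET", is_collection=True))     # list
print(request_verb("DELETE", is_collection=True))  # deletecollection
```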

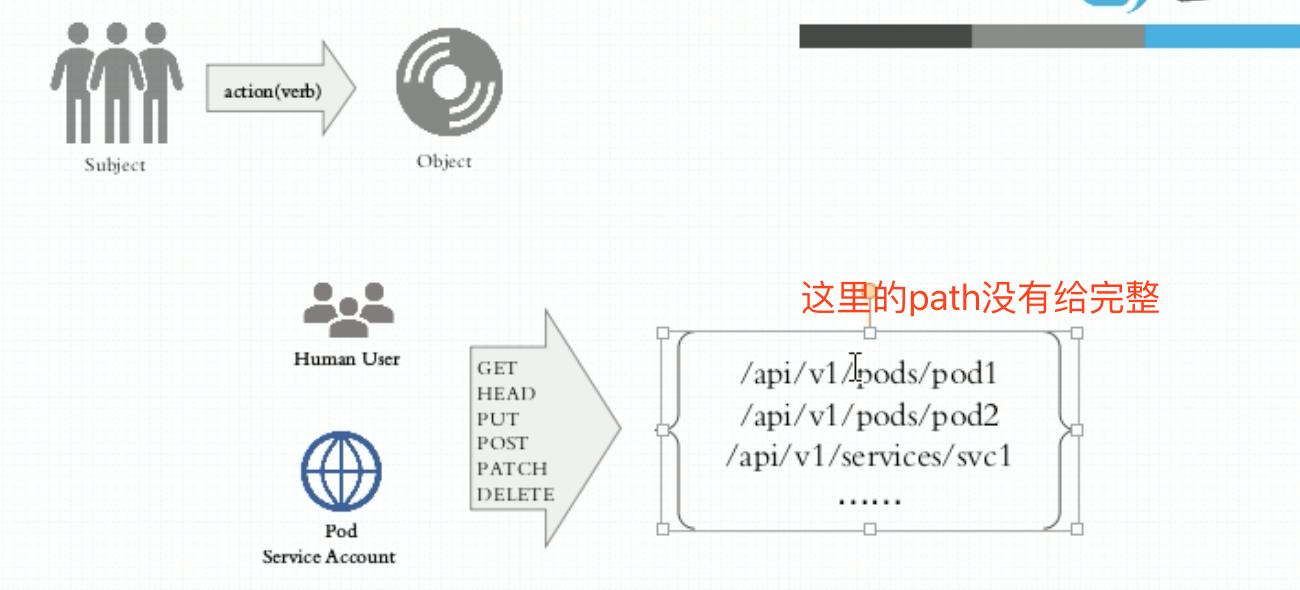

- subject: the acting entity, either a human user or a Pod's service account (ServiceAccount).

- Object URL: if the object is namespace-scoped:

/apis// /namespaces/ / [/OBJECT_ID]/
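As a rough illustration of where a namespaced object lives in the REST API, the sketch below assumes the standard Kubernetes path layout: the core group is served under `/api/VERSION`, named groups under `/apis/GROUP/VERSION`, followed by `namespaces/NAMESPACE/RESOURCE` and an optional object name. The helper `object_path` and the Pod name `myapp` are hypothetical.

```python
from typing import Optional


def object_path(group: str, version: str, namespace: str,
                resource: str, name: Optional[str] = None) -> str:
    """Build the REST path of a namespaced resource (hypothetical helper).

    The core group (group == "") lives under /api, named groups under /apis.
    Omitting `name` gives the path of the object list.
    """
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    path = f"{prefix}/namespaces/{namespace}/{resource}"
    return f"{path}/{name}" if name else path


print(object_path("", "v1", "default", "pods", "myapp"))
# /api/v1/namespaces/default/pods/myapp
print(object_path("apps", "v1", "default", "deployments"))
# /apis/apps/v1/namespaces/default/deployments
```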

How RBAC works:

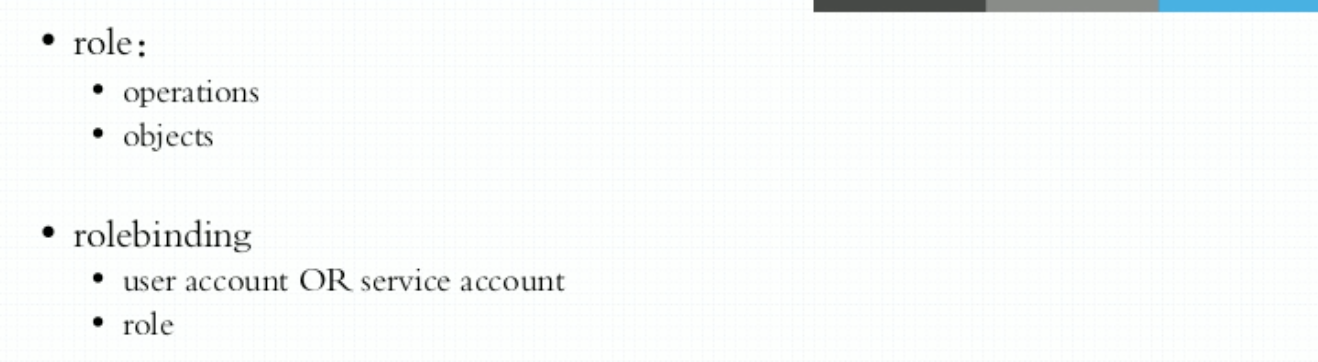

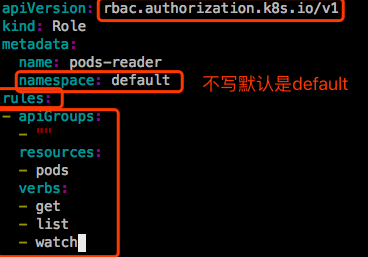

First define a Role. A Role is a standard Kubernetes resource, and it defines the following:

- operations (verbs)

- objects (resources)

Rules can only grant permissions; there are no deny rules.
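Because rules only grant, evaluating a request amounts to a union over all rules: the request is allowed if at least one rule matches, and denied otherwise. The simplified sketch below only models `apiGroups`, `resources` and `verbs` (it ignores `resourceNames`, non-resource URLs, and the fact that the apiserver gathers rules from every binding of the subject); `Rule` and `is_allowed` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Rule:
    """One rule of a Role: which verbs are allowed on which resources."""
    api_groups: List[str]
    resources: List[str]
    verbs: List[str]


def _match(allowed: List[str], value: str) -> bool:
    # "*" is the wildcard accepted in Role rules
    return "*" in allowed or value in allowed


def is_allowed(rules: List[Rule], api_group: str, resource: str, verb: str) -> bool:
    """Allow if ANY rule matches; no rule can deny."""
    return any(
        _match(r.api_groups, api_group)
        and _match(r.resources, resource)
        and _match(r.verbs, verb)
        for r in rules
    )


pod_reader = [Rule(api_groups=[""], resources=["pods"], verbs=["get", "list", "watch"])]
print(is_allowed(pod_reader, "", "pods", "list"))    # True
print(is_allowed(pod_reader, "", "pods", "delete"))  # False: nothing grants it
```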

Next, define a user account and bind it to the Role. This binding is called a RoleBinding.

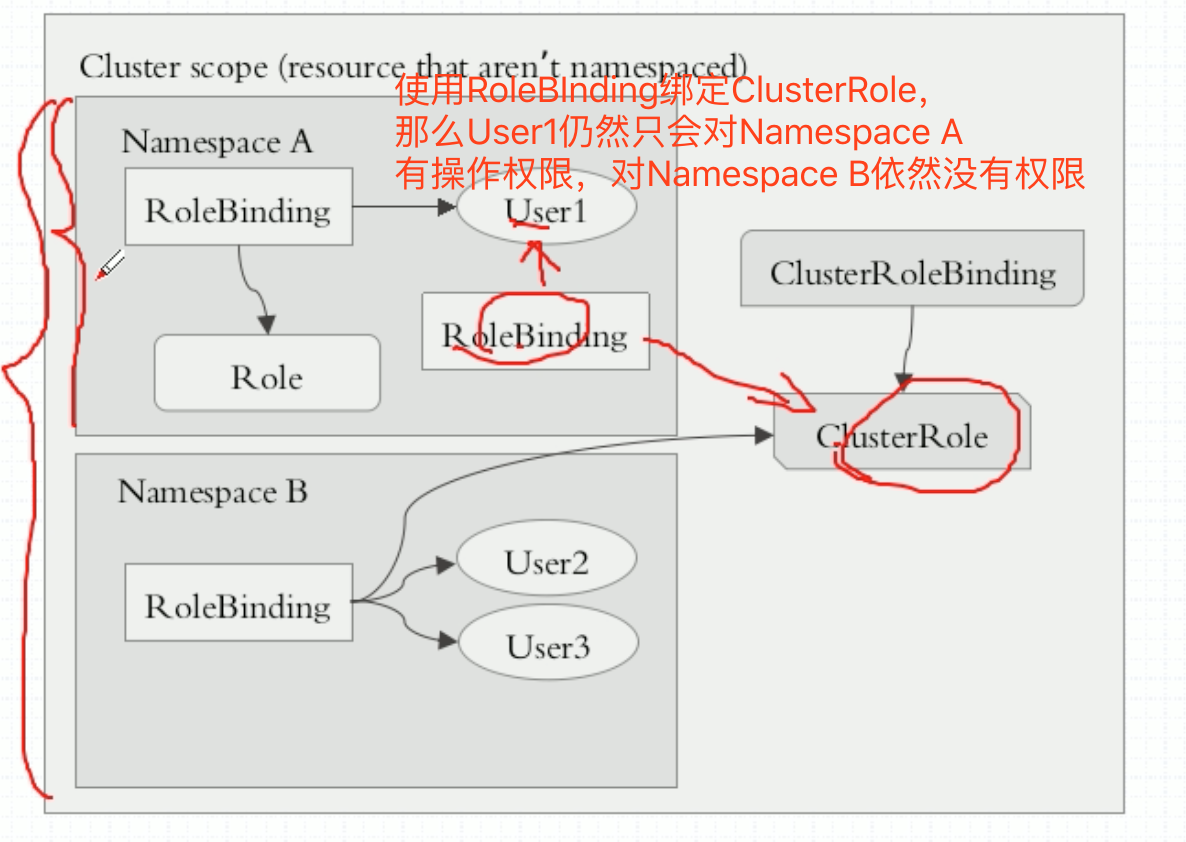

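In Kubernetes, resources exist at one of two scopes: cluster level and namespace level. Role and RoleBinding are namespace-scoped and grant permissions only within their namespace. Besides Role and RoleBinding there are ClusterRole and ClusterRoleBinding (cluster role and cluster role binding), both of which take effect cluster-wide.

So why this design? Wouldn't it be simpler to forbid a RoleBinding from binding a ClusterRole? Suppose a user needs admin permissions in their own namespace. With 10 namespaces, each with its own namespace administrator, how would you define this? You would create a Role with admin permissions in the first namespace and bind the user to it with a RoleBinding, and then define the same admin Role again in every other namespace. Instead, you can define a single ClusterRole and reference it from a RoleBinding in each namespace; the RoleBinding limits the ClusterRole's permissions to that namespace.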
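The "one ClusterRole, many RoleBindings" pattern can be sketched by generating the bindings as data. This is an illustration only: the ClusterRole name `ns-admin`, the namespaces `dev`/`test` and the users `alice`/`bob` are hypothetical. The output is a JSON `List`, which `kubectl apply -f` also accepts.

```python
import json
from typing import Dict, List


def namespace_admin_bindings(cluster_role: str, admins: Dict[str, str]) -> List[dict]:
    """Return one RoleBinding per namespace, all referencing the same ClusterRole.

    `admins` maps namespace -> user name (hypothetical data).
    """
    bindings = []
    for namespace, user in admins.items():
        bindings.append({
            "apiVersion": "rbac.authorization.k8s.io/v1",
            "kind": "RoleBinding",
            "metadata": {"name": f"{user}-admin", "namespace": namespace},
            # The ClusterRole is defined once for the whole cluster...
            "roleRef": {
                "apiGroup": "rbac.authorization.k8s.io",
                "kind": "ClusterRole",
                "name": cluster_role,
            },
            # ...but this binding only grants it inside `namespace`
            "subjects": [{
                "apiGroup": "rbac.authorization.k8s.io",
                "kind": "User",
                "name": user,
            }],
        })
    return bindings


docs = namespace_admin_bindings("ns-admin", {"dev": "alice", "test": "bob"})
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": docs}, indent=2))
```

Adding an eleventh namespace then means adding one RoleBinding, not another copy of the role definition.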

RBAC examples

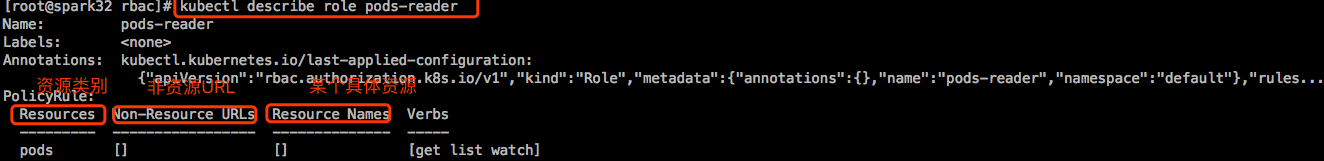

Example 1: Role and RoleBinding

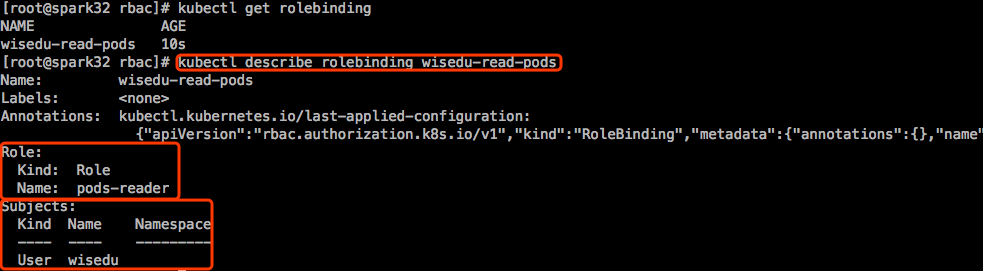

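In the previous post we created a user named wisedu, who has no permission to read Pods. wisedu is not an object stored in Kubernetes and was never created as a real account; it is only an identity that Kubernetes recognizes when kubectl authenticates with it. To let wisedu assume the role, we need a RoleBinding.

`--clusterrole=NAME|--role=NAME`: only one of the two can be specified for a binding.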
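In manifest form, example 1 could look roughly like the sketch below, which builds the two objects as Python dicts and prints them as JSON (`kubectl apply -f` accepts JSON as well as YAML). The user `wisedu` and namespace `default` come from the text; the Role name `pods-reader` and the binding name are hypothetical. Note that `roleRef` holds exactly one reference, which mirrors the rule that only one of `--clusterrole` and `--role` may be given.

```python
import json


def pod_reader_role(namespace: str) -> dict:
    """Role granting read-only access to Pods in one namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "pods-reader", "namespace": namespace},
        "rules": [{
            "apiGroups": [""],  # "" is the core API group, where Pods live
            "resources": ["pods"],
            "verbs": ["get", "list", "watch"],
        }],
    }


def bind_user_to_role(user: str, role: str, namespace: str) -> dict:
    """RoleBinding that lets `user` assume the Role `role` in `namespace`."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{user}-{role}", "namespace": namespace},
        # Exactly one role reference per binding
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "Role", "name": role},
        "subjects": [{"apiGroup": "rbac.authorization.k8s.io", "kind": "User", "name": user}],
    }


role = pod_reader_role("default")
binding = bind_user_to_role("wisedu", role["metadata"]["name"], "default")
print(json.dumps(role, indent=2))
print(json.dumps(binding, indent=2))
```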

Next, switch kubectl's current context:

wisedu can now view Pods only in the default namespace; Pods in other namespaces are still off limits.

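Example 2: ClusterRole and ClusterRoleBinding

A ClusterRole is defined the same way as a Role. Because a ClusterRole is a cluster-scoped resource that does not belong to any namespace, its metadata must not contain a namespace field. Let's create a ClusterRole.

First switch kubectl back to the administrator; otherwise the resource cannot be created.

After creating the ClusterRole, use a ClusterRoleBinding to bind wisedu to it, so that wisedu can view Pods in all namespaces. Before that, delete the RoleBinding created earlier.

Switch user and list Pods:

wisedu can now read Pods in all namespaces. However, wisedu only has read permission, not delete permission.

Next, delete the ClusterRoleBinding and bind the ClusterRole with a RoleBinding instead, and see the effect: wisedu can now read Pods only in the namespace where the RoleBinding lives.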
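A ClusterRole and its ClusterRoleBinding could be sketched as follows; both are cluster-scoped, so neither carries `metadata.namespace`. The ClusterRole name `cluster-reader` and the binding name are hypothetical; the read-only rule on Pods matches what the text describes.

```python
import json


def cluster_pod_reader() -> dict:
    """ClusterRole: same rule format as a Role, but no metadata.namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRole",
        "metadata": {"name": "cluster-reader"},  # cluster-scoped: no namespace
        "rules": [{"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}],
    }


def cluster_binding(user: str, cluster_role: str) -> dict:
    """ClusterRoleBinding: grants the ClusterRole in every namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": f"{user}-{cluster_role}"},  # also cluster-scoped
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "ClusterRole", "name": cluster_role},
        "subjects": [{"apiGroup": "rbac.authorization.k8s.io", "kind": "User", "name": user}],
    }


cr = cluster_pod_reader()
crb = cluster_binding("wisedu", cr["metadata"]["name"])
print(json.dumps(cr, indent=2))
print(json.dumps(crb, indent=2))
```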
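The three outcomes in the examples differ only in the binding's scope. The toy model below assumes a single read-only rule on Pods and ignores everything else the apiserver checks; `Binding` and `can` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Binding:
    kind: str                 # "RoleBinding" or "ClusterRoleBinding"
    namespace: Optional[str]  # set for a RoleBinding, None for a ClusterRoleBinding
    verbs: frozenset          # verbs granted by the referenced Role/ClusterRole


def can(binding: Binding, verb: str, namespace: str) -> bool:
    """Does the binding allow `verb` on Pods in `namespace`? (simplified)"""
    if verb not in binding.verbs:
        return False
    if binding.kind == "ClusterRoleBinding":
        return True  # effective in every namespace
    # A RoleBinding grants only inside its own namespace, even for a ClusterRole
    return binding.namespace == namespace


read = frozenset({"get", "list", "watch"})
crb = Binding("ClusterRoleBinding", None, read)
rb = Binding("RoleBinding", "default", read)  # RoleBinding referencing the ClusterRole

print(can(crb, "list", "kube-system"))  # True
print(can(crb, "delete", "default"))    # False: the role is read-only
print(can(rb, "list", "default"))       # True
print(can(rb, "list", "kube-system"))   # False
```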

Some built-in ClusterRoles

There are two very important ClusterRoles in the system: admin and cluster-admin.

admin

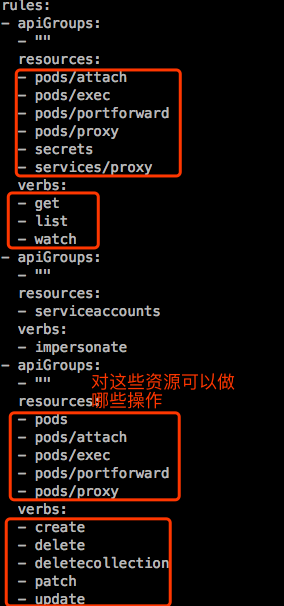

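admin exists for exactly the scenario described above: each namespace needs its own administrator who manages only that namespace. Instead of defining a Role in every namespace, reference the ClusterRole admin from a RoleBinding. admin does not have unlimited power over the namespace, though: according to the Kubernetes RBAC documentation, it does not allow write access to ResourceQuota or to the Namespace object itself.

Let's see which permissions the ClusterRole admin has:

Testing admin:

The admin ClusterRole can delete Pods.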

cluster-admin

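After Kubernetes is installed, the default kubectl configuration file has permission to manage all resources in all namespaces. This is because of the ClusterRole cluster-admin and the ClusterRoleBinding cluster-admin.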

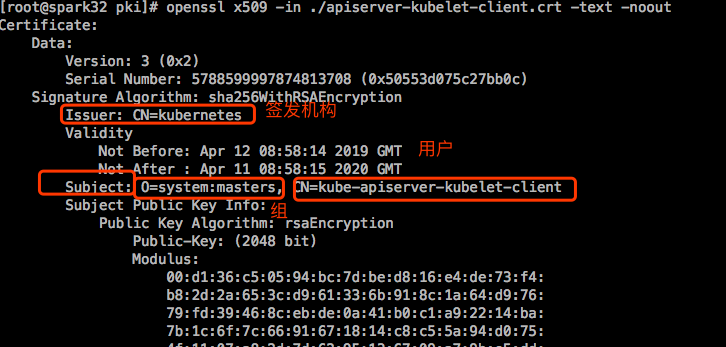

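The subject is system:masters, which is a group. The user kubernetes-admin belongs to system:masters. Why? Because the membership is written into kubernetes-admin's certificate.

In this setup, the CN behind kubernetes-admin is the kube-apiserver-kubelet-client shown in the figure above. The name kubernetes-admin seen in `kubectl config view` is only a label in the kubeconfig; Kubernetes identifies the user by the certificate's CN. When we create certificates later, we can also place the user in a group by setting the O (Organization) field.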
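How a client certificate becomes a user and groups can be sketched by parsing its subject: the apiserver's x509 authentication uses CN as the user name and each O as a group, which is how a certificate carrying O=system:masters matches the cluster-admin binding. The sketch assumes an `openssl -subj` style string and does not handle escaped slashes; `identity_from_subject` and the group `dev-team` are hypothetical.

```python
from typing import List, Tuple


def identity_from_subject(subject: str) -> Tuple[str, List[str]]:
    """Split a subject like "/CN=user/O=group1/O=group2" into (user, groups).

    CN becomes the user name; every O becomes a group.
    """
    user, groups = "", []
    for part in subject.strip("/").split("/"):
        key, _, value = part.partition("=")
        if key == "CN":
            user = value
        elif key == "O":
            groups.append(value)
    return user, groups


user, groups = identity_from_subject("/CN=wisedu/O=dev-team")
print(user, groups)                # wisedu ['dev-team']
# cluster-admin is bound to the group system:masters, which this cert lacks
print("system:masters" in groups)  # False
```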

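When authorizing with RBAC, a binding can target three kinds of subjects:

- A user. Both RoleBinding and ClusterRoleBinding can bind to a user.

- A group. Binding to a group means every user in that group can assume the role.

- A serviceaccount. The usage of `kubectl create rolebinding` lists all three subject flags:

  `kubectl create rolebinding NAME --clusterrole=NAME|--role=NAME [--user=username] [--group=groupname] [--serviceaccount=namespace:serviceaccountname] [--dry-run] [options]`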
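The usage line above can be mirrored by a small command builder that enforces its two constraints: exactly one of `--role`/`--clusterrole`, and service accounts written as `namespace:name`. The helper `create_rolebinding_argv` and the names `read-pods`, `dev-team` and `app-sa` are hypothetical; `view` is one of the built-in ClusterRoles.

```python
import shlex
from typing import List, Optional, Sequence


def create_rolebinding_argv(name: str, namespace: str,
                            role: Optional[str] = None,
                            clusterrole: Optional[str] = None,
                            users: Sequence[str] = (),
                            groups: Sequence[str] = (),
                            serviceaccounts: Sequence[str] = ()) -> List[str]:
    """Assemble a `kubectl create rolebinding` command line (hypothetical helper)."""
    # A binding references exactly one role: --role or --clusterrole, never both
    if (role is None) == (clusterrole is None):
        raise ValueError("specify exactly one of role or clusterrole")
    argv = ["kubectl", "create", "rolebinding", name, "-n", namespace]
    argv.append(f"--role={role}" if role is not None else f"--clusterrole={clusterrole}")
    argv += [f"--user={u}" for u in users]
    argv += [f"--group={g}" for g in groups]
    for sa in serviceaccounts:
        # Service accounts are given as namespace:name
        if ":" not in sa:
            raise ValueError(f"serviceaccount must be namespace:name, got {sa!r}")
        argv.append(f"--serviceaccount={sa}")
    return argv


argv = create_rolebinding_argv("read-pods", "default", clusterrole="view",
                               users=["wisedu"], groups=["dev-team"],
                               serviceaccounts=["default:app-sa"])
print(shlex.join(argv))
```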

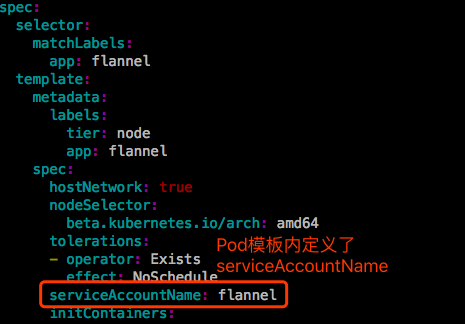

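So far we have demonstrated the first two. Binding a serviceaccount works no differently. Once a serviceaccount is the subject of a RoleBinding or ClusterRoleBinding, it has the corresponding access permissions, and any Pod started with that serviceaccount as its serviceAccountName gives its applications the same permissions.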
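The serviceaccount case can be sketched as three objects: the ServiceAccount, a RoleBinding whose subject is that ServiceAccount, and a Pod that sets `serviceAccountName`. All names here (`app-sa`, `pods-reader`, `demo`, the `nginx` image) are hypothetical. Unlike User and Group subjects, a ServiceAccount subject belongs to the core group and needs a namespace.

```python
import json


def serviceaccount_setup(namespace: str, sa: str, role: str) -> dict:
    """ServiceAccount + RoleBinding + a Pod that runs as that ServiceAccount."""
    service_account = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": sa, "namespace": namespace},
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{sa}-{role}", "namespace": namespace},
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "Role", "name": role},
        # ServiceAccount subjects live in the core group and need a namespace
        "subjects": [{"kind": "ServiceAccount", "name": sa, "namespace": namespace}],
    }
    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "demo", "namespace": namespace},
        "spec": {
            # Processes in this Pod call the apiserver with the SA's permissions
            "serviceAccountName": sa,
            "containers": [{"name": "app", "image": "nginx"}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [service_account, binding, pod]}


manifest = serviceaccount_setup("default", "app-sa", "pods-reader")
print(json.dumps(manifest, indent=2))
```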

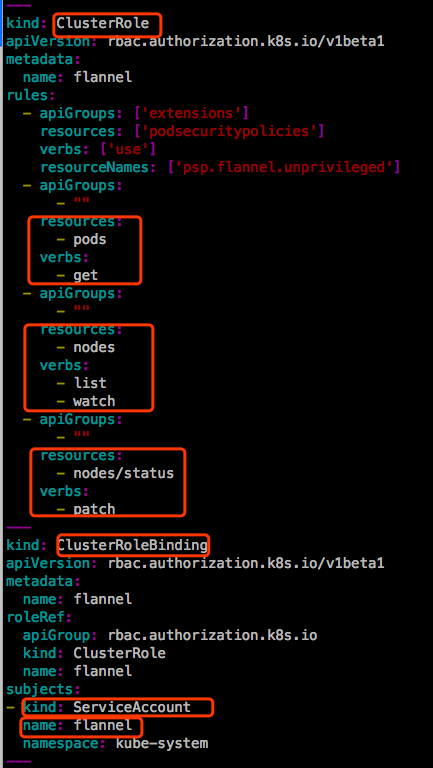

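Some Pods need administrator permissions, for example some Pods in the kube-system namespace, which usually require special permissions. Take flannel:

It then talks to the apiserver with the permissions of this ClusterRole.

Finally, admission control is rarely touched in practice; most day-to-day work is RBAC, that is, creating users and granting them permissions.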
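When such a Pod calls the apiserver with its ServiceAccount token, RBAC sees it under a fixed naming scheme: the user is `system:serviceaccount:NAMESPACE:NAME`, and the groups include `system:serviceaccounts`, `system:serviceaccounts:NAMESPACE` and `system:authenticated`. The sketch below assumes flannel runs as a ServiceAccount named `flannel` in `kube-system`; that pair is an assumption for illustration, and newer flannel manifests may use a different namespace. `serviceaccount_identity` is a hypothetical helper.

```python
from typing import List, Tuple


def serviceaccount_identity(namespace: str, name: str) -> Tuple[str, List[str]]:
    """User name and groups that RBAC sees for a ServiceAccount token."""
    user = f"system:serviceaccount:{namespace}:{name}"
    groups = [
        "system:serviceaccounts",               # every service account
        f"system:serviceaccounts:{namespace}",  # every SA in this namespace
        "system:authenticated",                 # every authenticated caller
    ]
    return user, groups


user, groups = serviceaccount_identity("kube-system", "flannel")
print(user)  # system:serviceaccount:kube-system:flannel
print(groups)
```

A ClusterRoleBinding naming this ServiceAccount as its subject is what grants the ClusterRole's permissions to that identity.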